Cybercriminals may feel less guilty by blaming the AI they create to do the malicious work

A Kaspersky expert has shared his analysis of the possible aftermath of Artificial Intelligence (AI), particularly the potential psychological hazards of this technology.

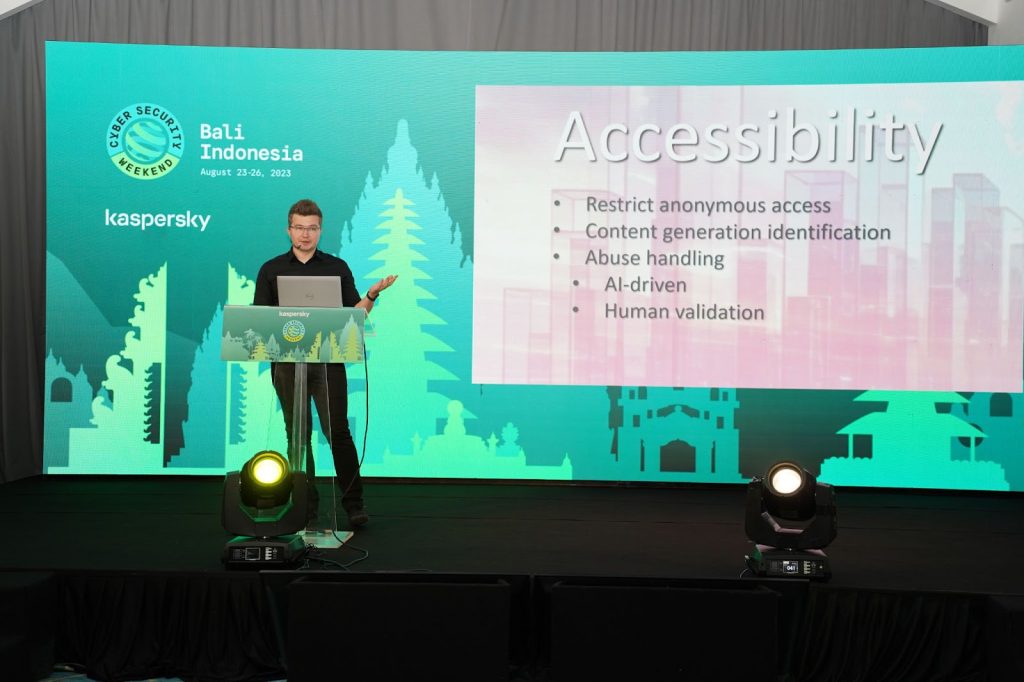

Vitaly Kamluk, Head of Research Center for Asia Pacific, Global Research and Analysis Team (GReAT) at Kaspersky, explained that as cybercriminals use AI to carry out malicious actions, they can shift the blame onto the technology and feel less accountable for the impact of their cyberattacks.

This could intensify what is known as “suffering distancing syndrome”.


“Other than technical threat aspects of AI, there is also a potential psychological hazard here. There is a known suffering distancing syndrome among cybercriminals. Physically assaulting someone on the street causes criminals a lot of stress because they often see their victim’s suffering. That doesn’t apply to a virtual thief who is stealing from a victim they will never see. Creating AI that magically brings the money or illegal profit distances the criminals even further, because it’s not even them, but the AI to be blamed,” explained Kamluk.

Another psychological by-product of AI that can affect IT security teams is “responsibility delegation”. As more cybersecurity processes and tools become automated and delegated to neural networks, humans may feel less responsible if a cyberattack occurs, especially in a company setting.

“A similar effect may apply to defenders, especially in the enterprise sector full of compliance and formal safety responsibilities. An intelligent defense system may become the scapegoat. In addition, the presence of a fully independent autopilot reduces the attention of a human driver,” he added.

Kamluk shared some guidelines for safely embracing the benefits of AI:

- Accessibility – We must restrict anonymous access to real intelligent systems built and trained on massive volumes of data. We should keep a history of generated content and be able to identify how a given piece of synthesized content was generated.

  As with the WWW, there should be a procedure for handling AI misuse and abuse, as well as clear contacts for reporting abuse. Reports can be verified by first-line AI-based support and, where required, validated by humans.

- Regulations – The European Union has already started discussions on labeling content produced with the help of AI. That way, users would at least have a quick and reliable way to detect AI-generated images, sound, video or text. There will always be offenders, but they will be a minority and will always have to run and hide.

  As for AI developers, it may be reasonable to license their activities, since such systems can be harmful. AI is a dual-use technology, and, as with military or other dual-use equipment, its production should be controlled, including export restrictions where necessary.

- Education – The most effective measure for everyone is raising awareness of how to detect artificial content, how to validate it and how to report possible abuse.

  Schools should teach the concept of AI, how it differs from natural intelligence and how reliable or unreliable it can be, given its tendency to hallucinate.

  Software developers must be taught to use the technology responsibly and be made aware of the penalties for abusing it.

“Some predict that AI will be right at the center of the apocalypse, which will destroy human civilization. Multiple C-level executives of large corporations even stood up and called for a slowdown of the AI to prevent the calamity. It is true that with the rise of generative AI, we have seen a breakthrough of technology that can synthesize content similar to what humans do: from images to sound, deepfake videos, and even text-based conversations indistinguishable from human peers. Like most technological breakthroughs, AI is a double-edged sword. We can always use it to our advantage as long as we know how to set secure directives for these smart machines,” added Kamluk.

Kaspersky will continue the discussion about the future of cybersecurity at the Kaspersky Security Analyst Summit (SAS) 2023, taking place in Phuket, Thailand, from 25 to 28 October.

This event welcomes high-caliber anti-malware researchers, global law enforcement agencies, Computer Emergency Response Teams, and senior executives from financial services, technology, healthcare, academia, and government agencies from around the globe.

Interested participants can learn more here: https://thesascon.com/#participation-opportunities.

About Kaspersky

Kaspersky is a global cybersecurity and digital privacy company founded in 1997. Kaspersky’s deep threat intelligence and security expertise is constantly transforming into innovative solutions and services to protect businesses, critical infrastructure, governments and consumers around the globe. The company’s comprehensive security portfolio includes leading endpoint protection, specialized security products and services, as well as Cyber Immune solutions to fight sophisticated and evolving digital threats. Over 400 million users are protected by Kaspersky technologies and we help over 220,000 corporate clients protect what matters most to them. Learn more at www.kaspersky.com.